I am a research associate and Ph.D. student at the Humanoid Robots Lab headed by Prof. Maren Bennewitz at the University of Bonn, Germany. My research interests cover personalized human-aware robot navigation, reinforcement learning, and human-robot interaction. I hold a Master’s degree in physics from the University of Goettingen, Germany, and am a member of the Lamarr Institute for Machine Learning and Artificial Intelligence and the Center for Robotics in Bonn, Germany.

Research Spotlight

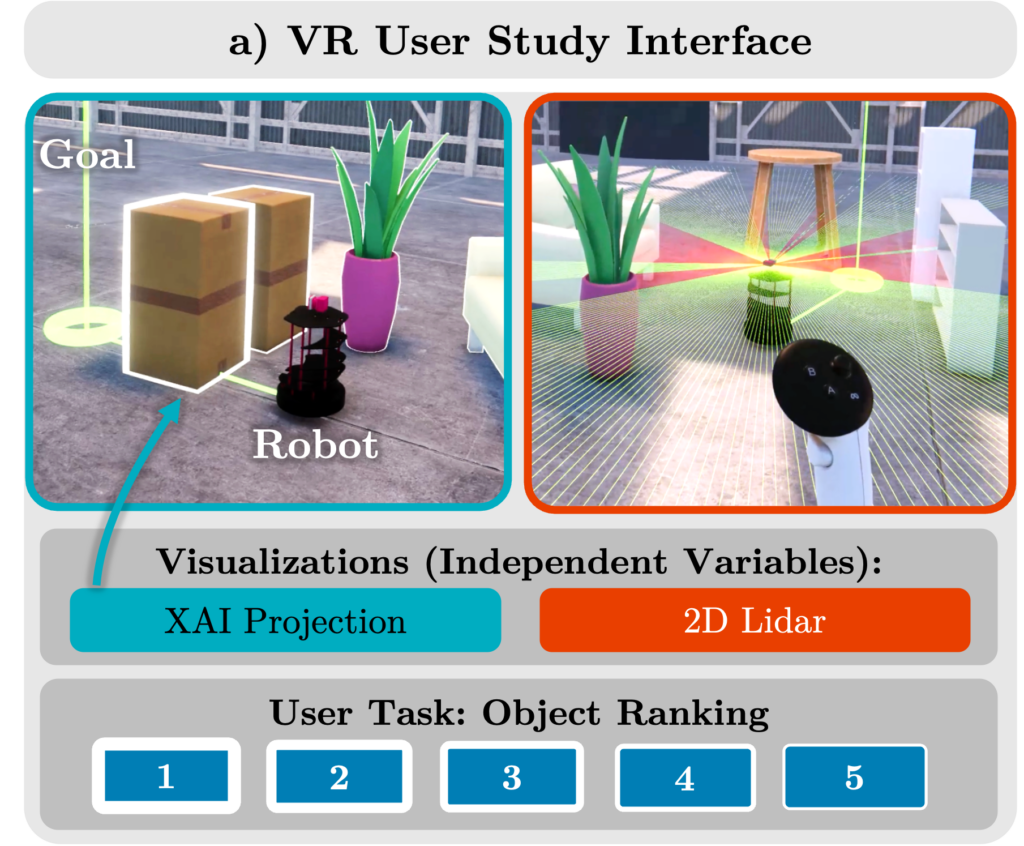

Immersive Explainability: Visualizing Robot Navigation Decisions through XAI Semantic Scene Projections in Virtual Reality

J. de Heuvel, S. Müller, M. Wessels, A. Akhtar, C. Bauckhage, and M. Bennewitz

Submitted for publication. arXiv preprint, 2025.

End-to-end robot policies achieve high performance through neural networks trained via reinforcement learning (RL). Yet, their black-box nature and abstract reasoning pose challenges for human-robot interaction (HRI), because humans may have difficulty understanding and predicting the robot’s navigation decisions, which hinders the development of trust. We present a virtual reality (VR) interface that visualizes explainable AI (XAI) outputs and the robot’s lidar perception to support intuitive interpretation of RL-based navigation behavior. By visually highlighting objects based on their attribution scores, the interface grounds abstract policy explanations in the scene context. This XAI visualization bridges the gap between obscure numerical XAI attribution scores and a human-centric, semantic level of explanation. A within-subjects study with 24 participants evaluated the effectiveness of our interface for four visualization conditions combining XAI and lidar. Participants ranked scene objects across navigation scenarios by their importance to the robot and then completed a questionnaire assessing subjective understanding and predictability. Results show that semantic projection of attributions significantly enhances non-expert users’ objective understanding and subjective awareness of robot behavior. In addition, lidar visualization further improves perceived predictability, underscoring the value of integrating XAI and sensor visualization for transparent, trustworthy HRI.

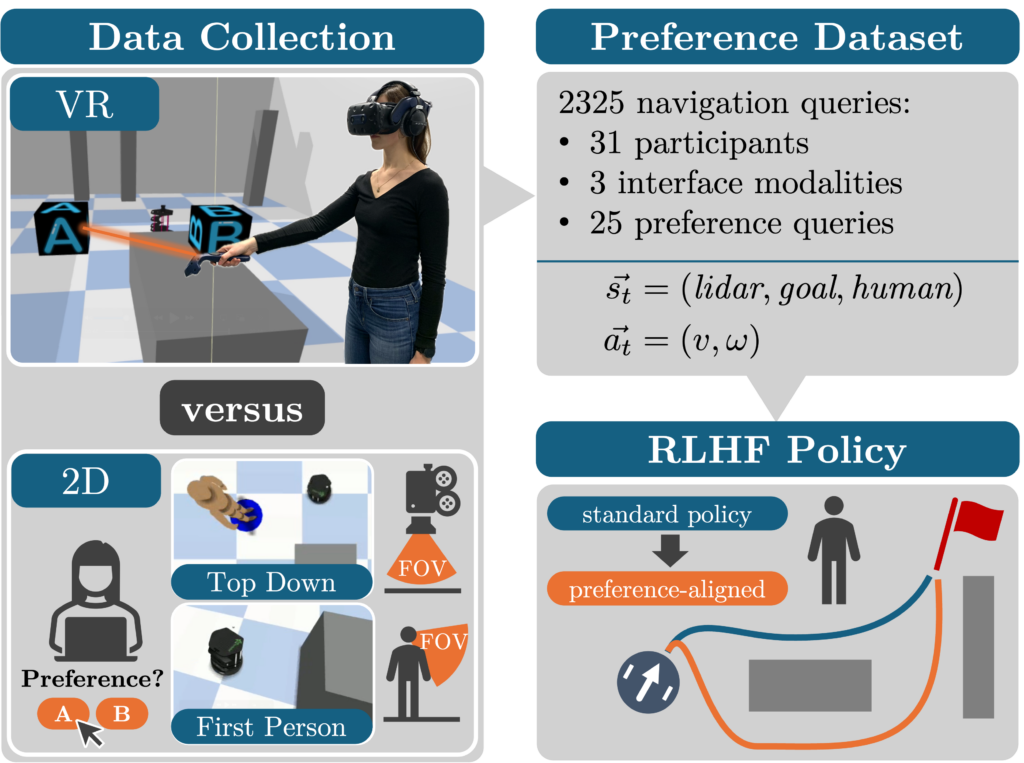

The Impact of VR and 2D Interfaces on Human Feedback in Preference-Based Robot Learning

J. de Heuvel, D. Marta, S. Holk, I. Leite, and M. Bennewitz

Submitted for publication. arXiv preprint, 2025.

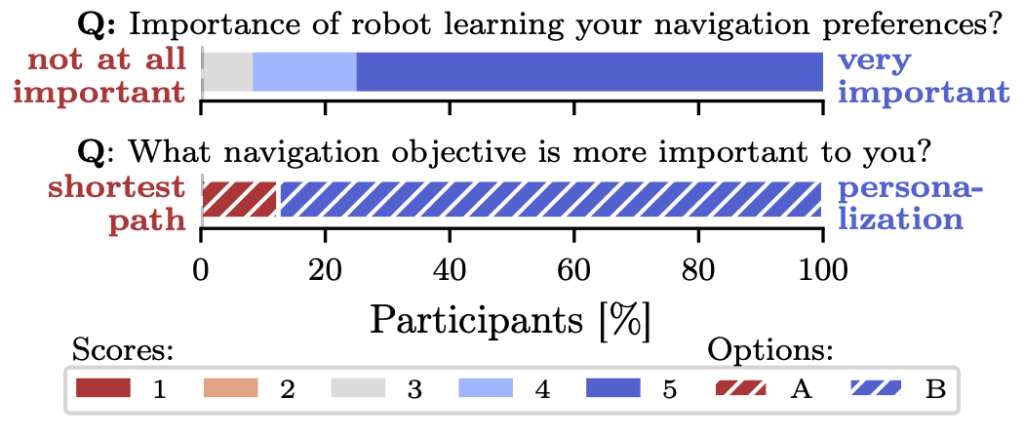

Aligning robot navigation with human preferences is essential for ensuring comfortable and predictable robot movement in shared spaces, facilitating seamless human-robot coexistence. While preference-based learning methods, such as reinforcement learning from human feedback (RLHF), enable this alignment, the choice of the preference collection interface may influence the process. Traditional 2D interfaces provide structured views but lack spatial depth, whereas immersive VR offers richer perception, potentially affecting preference articulation. This study systematically examines how the interface modality impacts human preference collection and navigation policy alignment. We introduce a novel dataset of 2,325 human preference queries collected through both VR and 2D interfaces, revealing significant differences in user experience, preference consistency, and policy outcomes. Our findings highlight the trade-offs between immersion, perception, and preference reliability, emphasizing the importance of interface selection in preference-based robot learning. The dataset will be publicly released to support future research.

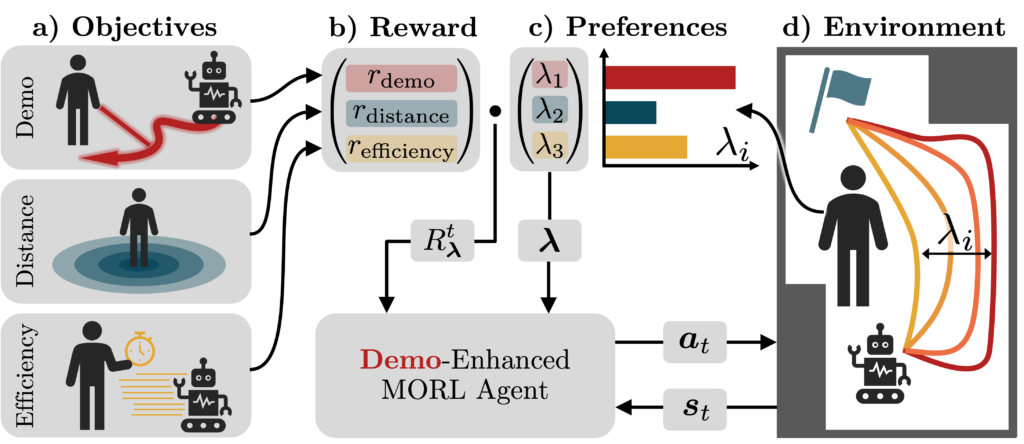

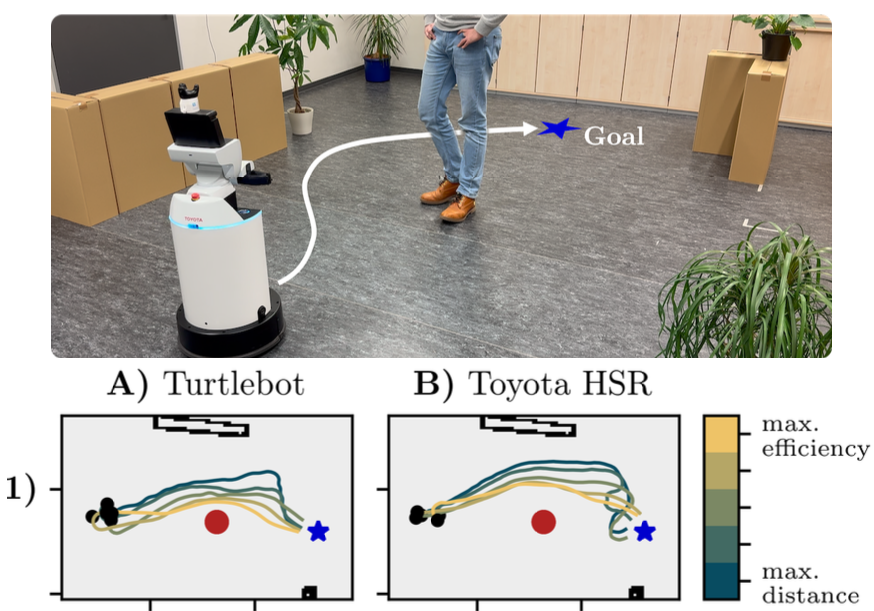

Demonstration-Enhanced Adaptive Multi-Objective Robot Navigation

J. de Heuvel, T. Sethuraman, and M. Bennewitz

Submitted for publication. arXiv preprint, 2025.

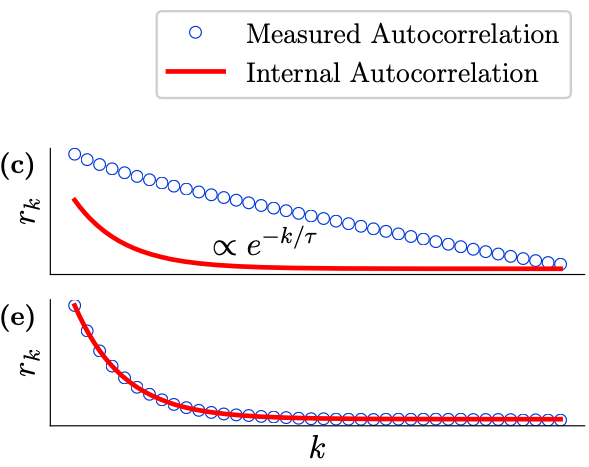

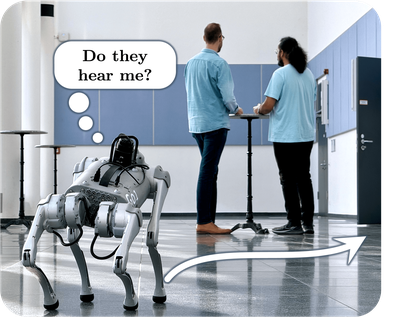

Preference-aligned robot navigation in human environments is typically achieved through learning-based approaches that use user feedback or demonstrations for personalization. However, personal preferences are subject to change and may even be context-dependent. Traditional reinforcement learning (RL) approaches with static reward functions often fall short in adapting to such varying preferences, since the learned policy reflects the demonstrations to a fixed degree once training is completed. This paper introduces a structured framework that combines demonstration-based learning with multi-objective reinforcement learning (MORL). To ensure real-world applicability, our approach allows the robot navigation policy to adapt dynamically to changing user preferences without retraining. It smoothly modulates how strongly the policy reflects the demonstration data, alongside other preference-related objectives. Through rigorous evaluations, including a baseline comparison and sim-to-real transfer on two robots, we demonstrate our framework’s capability to adapt to user preferences accurately while achieving high navigational performance in terms of collision avoidance and goal reaching.

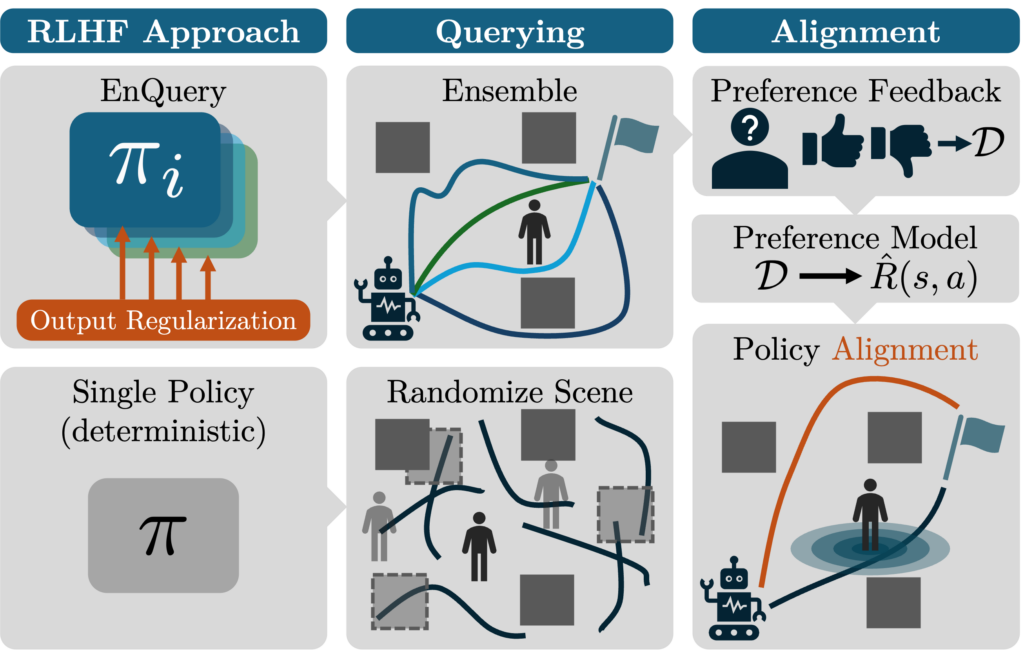

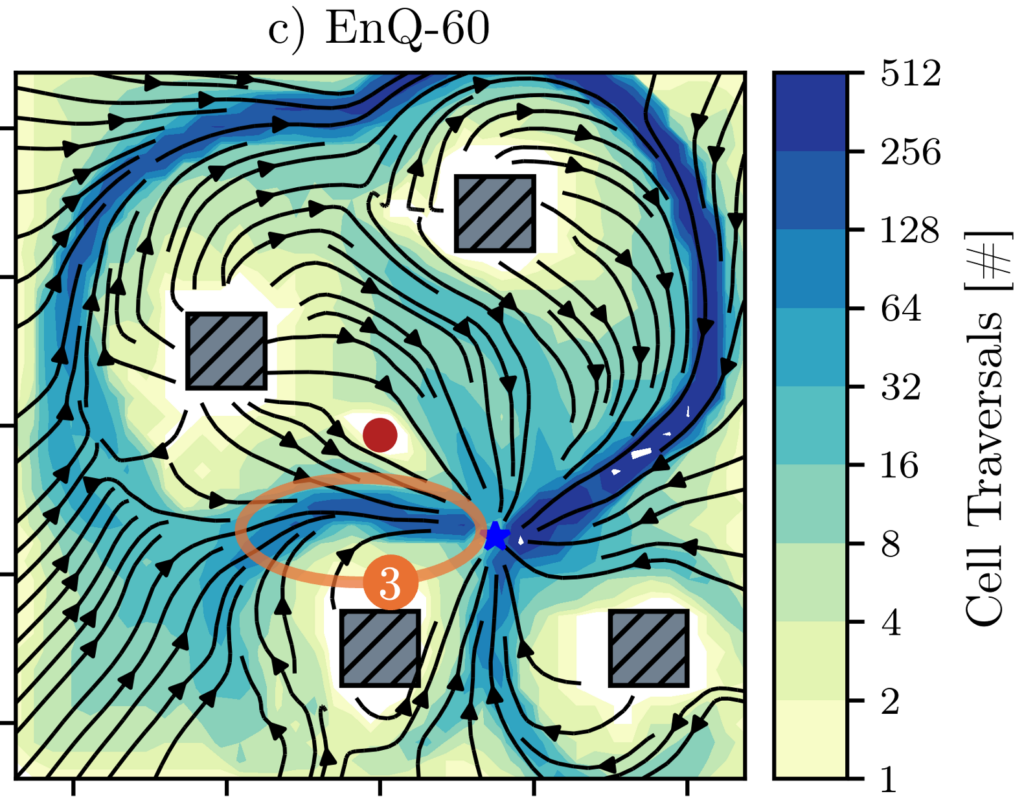

EnQuery: Ensemble Policies for Diverse Query-Generation in Preference Alignment of Robot Navigation

J. de Heuvel, F. Seiler, and M. Bennewitz

Proceedings of the IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), 2024.

To align mobile robot navigation policies with user preferences through reinforcement learning from human feedback (RLHF), reliable and behavior-diverse user queries are required. However, deterministic policies fail to generate a variety of navigation trajectory suggestions for a given navigation task configuration. We introduce EnQuery, a query generation approach using an ensemble of policies that achieve behavioral diversity through a regularization term. For a given navigation task, EnQuery produces multiple navigation trajectory suggestions, making preference data collection more efficient by requiring fewer queries. Our method demonstrates superior performance in aligning navigation policies with user preferences in low-query regimes, offering improved policy convergence from sparse preference queries. The evaluation is complemented by a novel explainability representation that captures the full-scene navigation behavior of the mobile robot in a single plot.

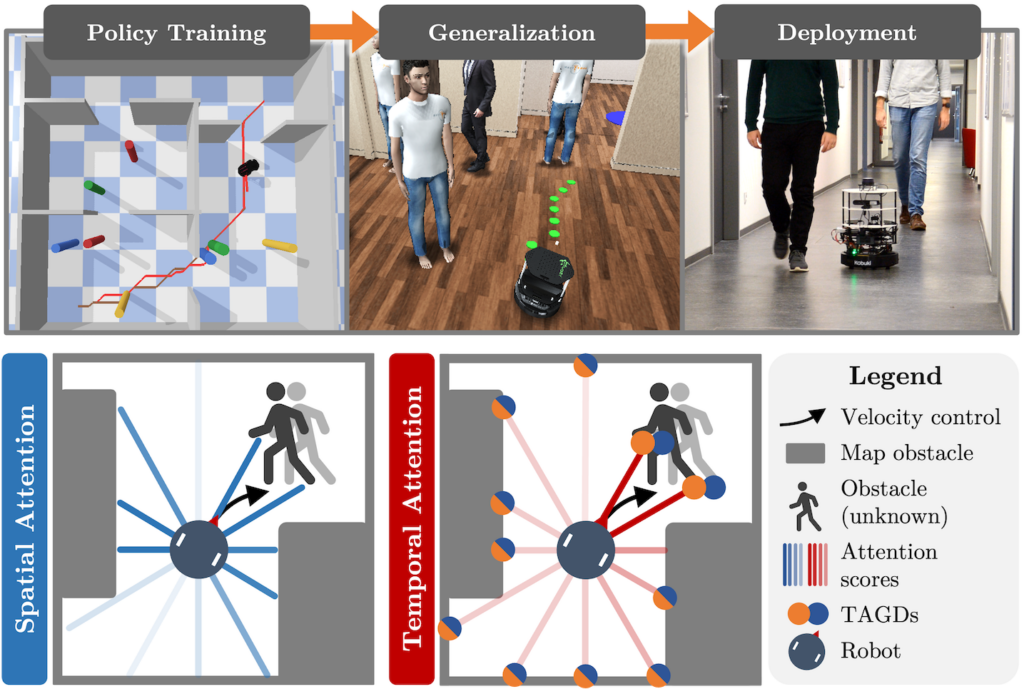

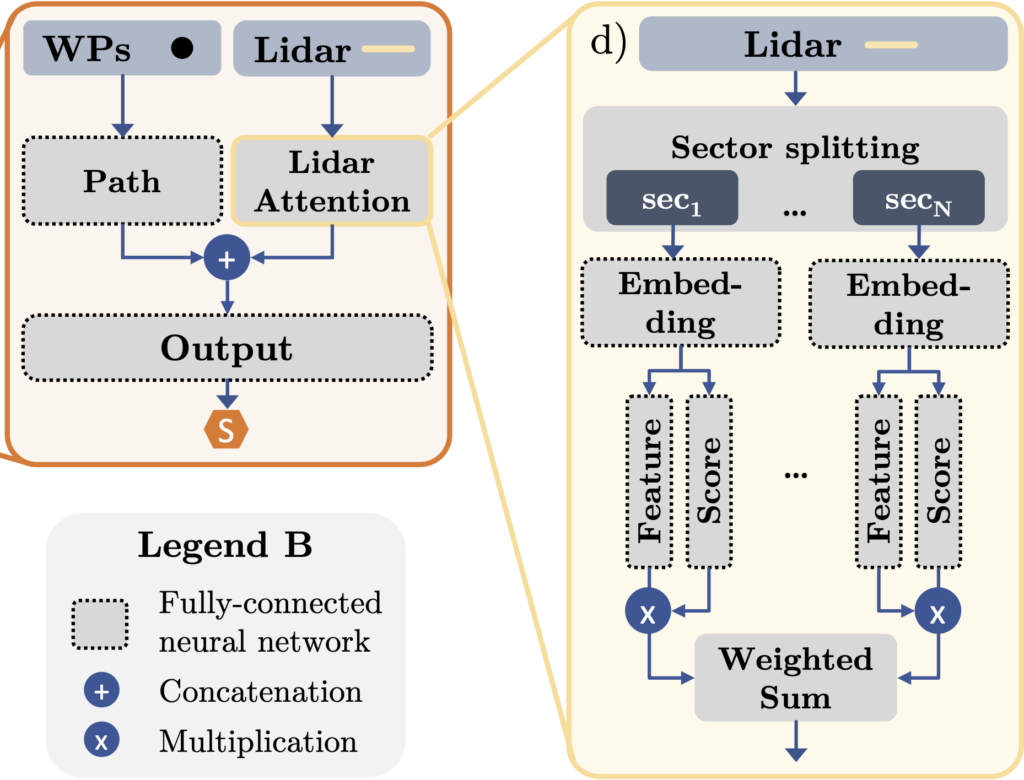

Spatiotemporal Attention Enhances Lidar-Based Robot Navigation in Dynamic Environments

J. de Heuvel, X. Zeng, W. Shi, T. Sethuraman, and M. Bennewitz

IEEE Robotics and Automation Letters (RA-L), 2024.

Foresighted robot navigation in dynamic indoor environments with cost-efficient hardware necessitates a lightweight yet dependable controller. Thus, inferring the scene dynamics from sensor readings without explicit object tracking is a pivotal aspect of foresighted navigation among pedestrians. In this paper, we introduce a spatiotemporal attention pipeline for enhanced navigation based on 2D lidar sensor readings. This pipeline is complemented by a novel lidar-state representation that emphasizes dynamic obstacles over static ones. The attention mechanism then enables selective scene perception across both space and time, resulting in improved overall navigation performance in dynamic scenarios. We thoroughly evaluated the approach in different scenarios and simulators and found that it generalizes well to unseen environments. The results demonstrate outstanding performance compared to state-of-the-art methods and enable seamless deployment of the learned controller on a real robot.

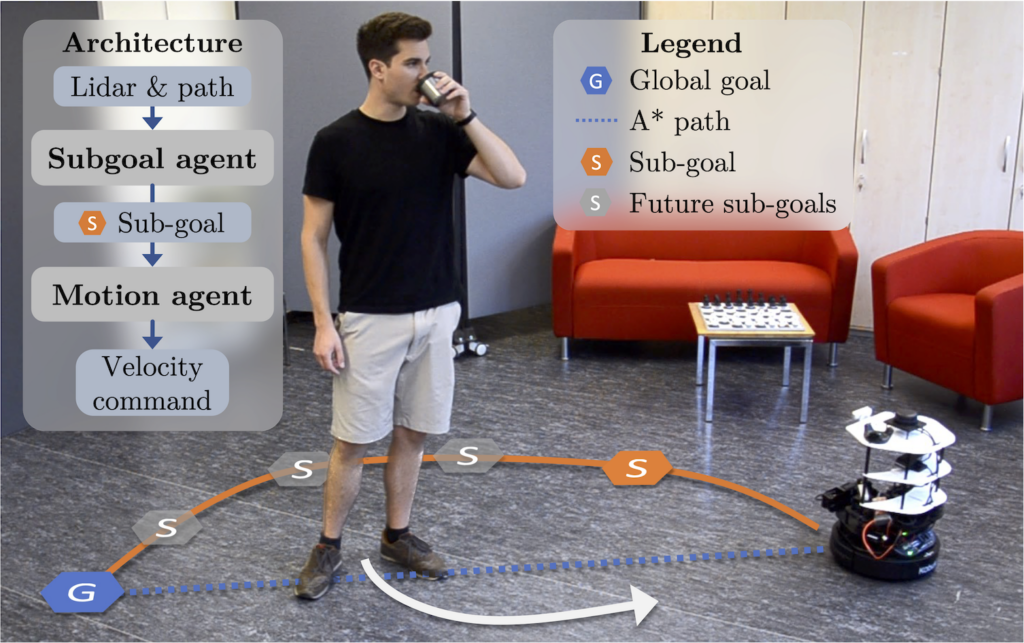

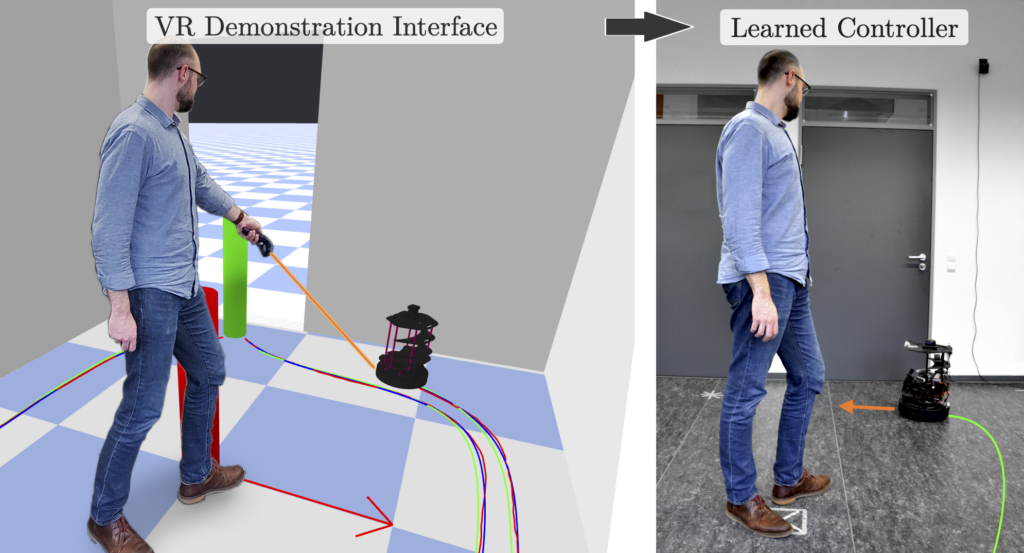

Subgoal-Driven Navigation in Dynamic Environments Using Attention-Based Deep Reinforcement Learning

J. de Heuvel, W. Shi, X. Zeng, and M. Bennewitz

arXiv preprint, 2023. Accepted for publication at the IEEE/RSJ International Conference on Advanced Robotics (ICAR 2023).

Collision-free, goal-directed navigation in environments containing unknown static and dynamic obstacles is still a great challenge, especially when manual tuning of navigation policies or costly motion prediction needs to be avoided. In this paper, we therefore propose a subgoal-driven hierarchical navigation architecture that is trained with deep reinforcement learning and decouples obstacle avoidance and motor control. In particular, we separate the navigation task into the prediction of the next subgoal position for avoiding collisions while moving toward the final target position, and the prediction of the robot’s velocity controls. By relying on 2D lidar, our method learns to avoid obstacles while still achieving goal-directed behavior as well as to generate low-level velocity control commands to reach the subgoals. In our architecture, we apply the attention mechanism on the robot’s 2D lidar readings and compute the importance of lidar scan segments for avoiding collisions. As we show in simulated and real-world experiments with a Turtlebot robot, our proposed method leads to smooth and safe trajectories among humans and significantly outperforms a state-of-the-art approach in terms of success rate.

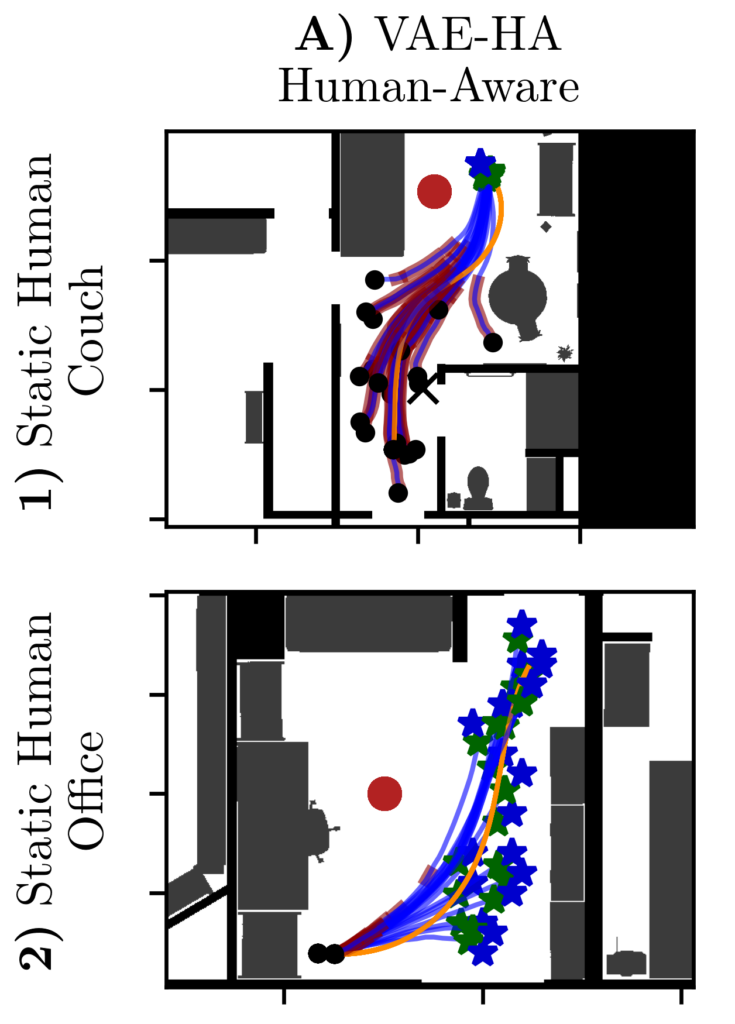

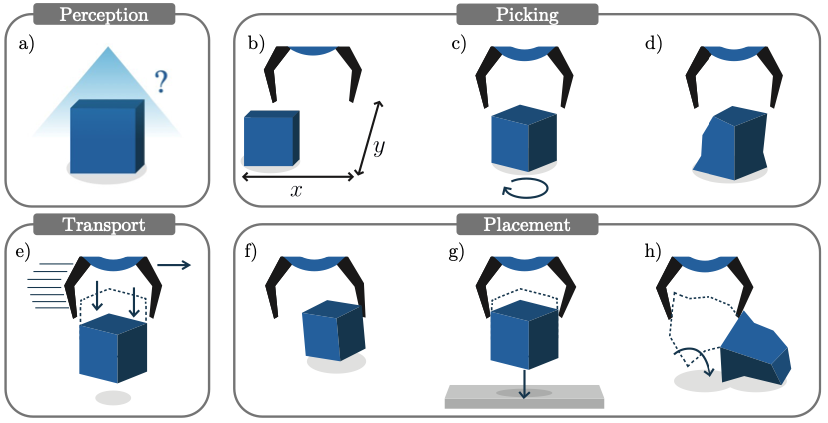

Learning Depth Vision-Based Personalized Robot Navigation From Dynamic Demonstrations in Virtual Reality

J. de Heuvel, N. Corral, B. Kreis, J. Conradi, A. Driemel, and M. Bennewitz

Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2023.

For the best human-robot interaction experience, the robot’s navigation policy should take the user’s personal preferences into account. In this paper, we present a learning framework complemented by a perception pipeline to train a depth vision-based, personalized navigation controller from user demonstrations. Our virtual reality interface enables users to demonstrate robot navigation trajectories while they themselves are in motion, covering dynamic interaction scenarios. The novel perception pipeline employs a variational autoencoder in combination with a motion predictor. It compresses the perceived depth images into a latent state representation, enabling the learning agent to reason efficiently about the robot’s dynamic environment. In a detailed analysis and ablation study, we evaluate different configurations of the perception pipeline.

To further quantify the navigation controller’s quality of personalization, we develop and apply a novel metric to measure preference reflection based on the Fréchet Distance. We discuss the robot’s navigation performance in various virtual scenes and demonstrate the first personalized robot navigation controller that solely relies on depth images.
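The personalization metric is described only as being based on the Fréchet distance. As a rough sketch of that building block, the following computes the discrete Fréchet distance (the dynamic program of Eiter and Mannila) between a demonstrated trajectory and a robot trajectory. It assumes both trajectories are lists of 2D waypoints. How the paper turns this distance into a preference-reflection score is not stated here, so the function name and trajectory format are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # hypothetical waypoint format: (x, y) in meters


def discrete_frechet(p: Sequence[Point], q: Sequence[Point]) -> float:
    """Discrete Fréchet distance between two polylines (Eiter & Mannila DP).

    ca[i][j] holds the smallest achievable maximum pointwise distance over all
    monotone couplings of p[0..i] and q[0..j].
    """
    if not p or not q:
        raise ValueError("both trajectories must be non-empty")
    n, m = len(p), len(q)
    ca: List[List[float]] = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            d = math.dist(p[i], q[j])  # Euclidean distance between waypoints
            if i == 0 and j == 0:
                ca[i][j] = d
            elif i == 0:
                ca[i][j] = max(ca[0][j - 1], d)
            elif j == 0:
                ca[i][j] = max(ca[i - 1][0], d)
            else:
                # Advance along p, along q, or along both; keep the cheapest
                # predecessor, then account for the current pair's distance.
                best_prev = min(ca[i - 1][j], ca[i - 1][j - 1], ca[i][j - 1])
                ca[i][j] = max(best_prev, d)
    return ca[n - 1][m - 1]


if __name__ == "__main__":
    demo = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
    robot = [(0.0, 0.0), (1.0, 0.5), (2.0, 0.5), (3.0, 0.0)]
    print(discrete_frechet(demo, robot))  # the robot deviates by 0.5 m at most
```

A smaller value means the robot path stays closer to the demonstration under the best order-preserving pairing of waypoints. The dynamic program runs in O(nm) time and memory for trajectories of n and m waypoints.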

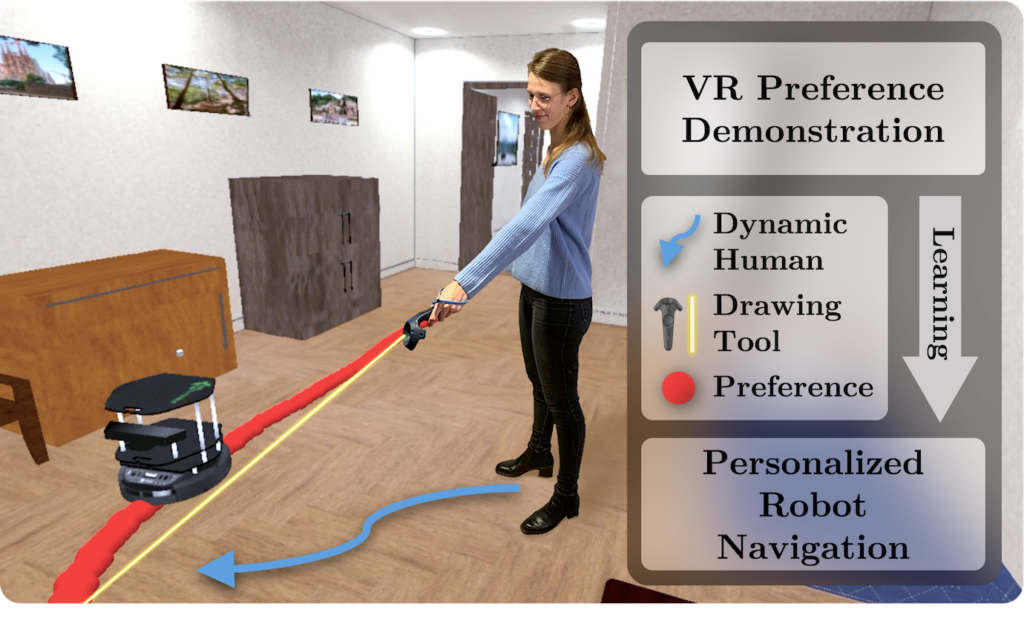

Learning Personalized Human-Aware Robot Navigation Using Virtual Reality Demonstrations from a User Study

J. de Heuvel, N. Corral, L. Bruckschen, and M. Bennewitz

Proceedings of the IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), 2022.

For the most comfortable, human-aware robot navigation, subjective user preferences need to be taken into account. This paper presents a novel reinforcement learning framework to train a personalized navigation controller, along with an intuitive virtual reality demonstration interface. The conducted user study provides evidence that our personalized approach significantly outperforms classical approaches, yielding more comfortable human-robot experiences. We achieve these results using only a few demonstration trajectories from non-expert users, most of whom appreciated the intuitive demonstration setup. As we show in the experiments, the learned controller generalizes well to states not covered in the demonstration data while still reflecting user preferences during navigation. Finally, we transfer the navigation controller to a real robot without loss in performance.

Characterizing spreading dynamics of subsampled systems with nonstationary external input

J. de Heuvel, J. Wilting, M. Becker, V. Priesemann, and J. Zierenberg

Phys. Rev. E 102, 040301(R), 2020.

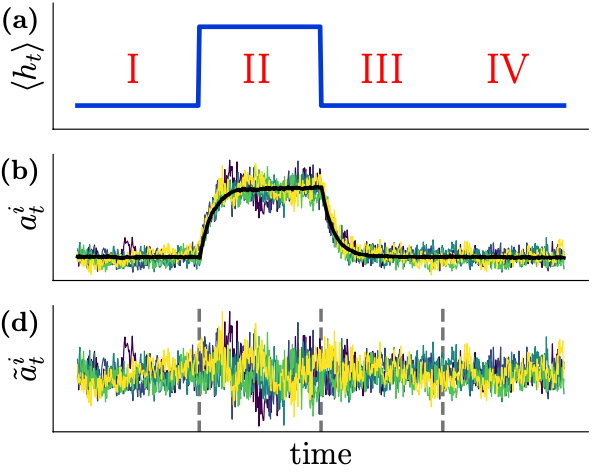

Many systems with propagation dynamics, such as spike propagation in neural networks and the spreading of infectious diseases, can be approximated by autoregressive models. Estimating the model parameters can be complicated by two factors: experimental limitations often mean that only a fraction of the system is observed (subsampling), and the parameters may be time-dependent. Both can lead to incorrect estimates. We show analytically how to overcome the subsampling bias when estimating the propagation rate for systems with certain nonstationary external input. This approach is readily applicable to trial-based experimental setups and seasonal fluctuations, as demonstrated on spike recordings from monkey prefrontal cortex and on the spreading of norovirus and measles.
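To illustrate why subsampling biases a naive estimate of the propagation rate m, here is a sketch of the stationary multistep regression idea. Regressing the observed activity at lag k on the current activity gives slopes r_k = b·m^k with an unknown bias factor b < 1. The naive estimate r_1 is therefore biased, while the decay of r_k across k still recovers m. This is not the paper's estimator for nonstationary input. The following are assumptions made for illustration: the full-system activity is a Gaussian AR(1) process; subsampling is mimicked by scaling with `alpha` plus independent observation noise; all function names and parameter values are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple


def simulate_subsampled_ar1(m: float, h: float, alpha: float, noise_sd: float,
                            n_steps: int, seed: int = 0) -> List[float]:
    """Full activity A_{t+1} = m*A_t + h + eps_t, observed only as
    a_t = alpha*A_t + noise (a simplified Gaussian stand-in for subsampling)."""
    rng = random.Random(seed)
    activity = h / (1.0 - m)  # start at the stationary mean
    observed = []
    for _ in range(n_steps):
        activity = m * activity + h + rng.gauss(0.0, 1.0)
        observed.append(alpha * activity + rng.gauss(0.0, noise_sd))
    return observed


def linear_fit(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares fit y = slope*x + intercept."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sxy / sxx
    return slope, my - slope * mx


def multistep_regression(series: Sequence[float],
                         k_max: int) -> Tuple[float, float, List[float]]:
    """Estimate m and b from lagged slopes r_k = b * m**k via a log-linear fit."""
    slopes = [linear_fit(series[:-k], series[k:])[0] for k in range(1, k_max + 1)]
    ks = list(range(1, k_max + 1))
    log_m, log_b = linear_fit(ks, [math.log(r) for r in slopes])
    return math.exp(log_m), math.exp(log_b), slopes


if __name__ == "__main__":
    obs = simulate_subsampled_ar1(m=0.9, h=1.0, alpha=0.5, noise_sd=1.0,
                                  n_steps=200_000, seed=1)
    m_hat, b_hat, slopes = multistep_regression(obs, k_max=10)
    print(f"naive r_1 = {slopes[0]:.3f}, multistep m = {m_hat:.3f}, b = {b_hat:.3f}")
```

With these settings, theory predicts b ≈ 0.57. The naive slope r_1 should therefore land near 0.51, while the multistep estimate should land close to the true m = 0.9. Fitting across several lags lets the bias factor b be absorbed into the intercept rather than distorting m.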

Other Publications

Auditory Localization and Assessment of Consequential Robot Sounds: A Multi-Method Study in Virtual Reality

M. Wessels, J. de Heuvel, L. Müller, A. L. Maier, M. Bennewitz, and J. Kraus

Submitted for publication. arXiv preprint, 2025.

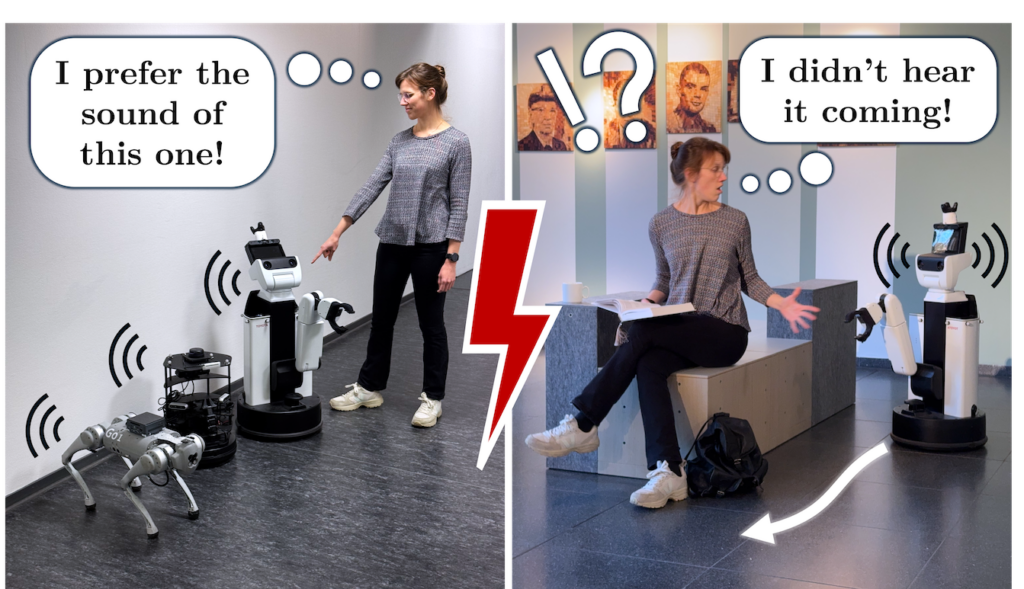

Sound Matters: Auditory Detectability of Mobile Robots

S. Agrawal, M. Wessels, J. de Heuvel, J. Kraus, and M. Bennewitz

Proceedings of the IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), 2024.

RHINO-VR Experience: Teaching Mobile Robotics Concepts in an Interactive Museum Exhibit

E. Schlachhoff, N. Dengler, L. Van Holland, P. Stotko, J. de Heuvel, R. Klein, and M. Bennewitz

Proceedings of the IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), 2024.

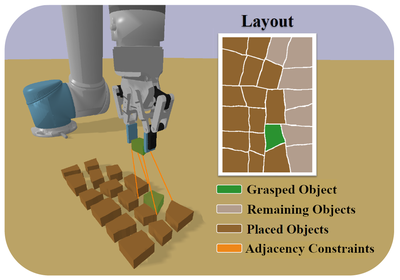

Compact Multi-Object Placement Using Adjacency-Aware Reinforcement Learning

B. Kreis, N. Dengler, J. de Heuvel, R. Menon, H. D. Perur, and M. Bennewitz

Proceedings of the IEEE-RAS International Conference on Humanoid Robots (Humanoids), 2024.

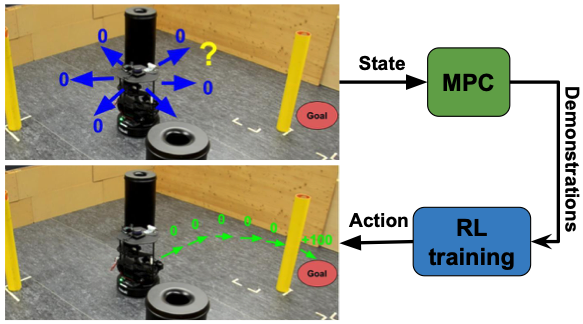

Handling Sparse Rewards in Reinforcement Learning Using Model Predictive Control

M. Dawood, N. Dengler, J. de Heuvel, and M. Bennewitz

Proceedings of the IEEE International Conference on Robotics & Automation (ICRA), 2023.

Reactive Correction of Object Placement Errors for Robotic Arrangement Tasks

B. Kreis, R. Menon, B. K. Adinarayan, J. de Heuvel, and M. Bennewitz

Proceedings of the International Conference on Intelligent Autonomous Systems (IAS), 2023.

Talks, Presentations & Workshops

1st German Robotics Conference

Poster Presentation: Interactive XAI for Reinforcement Learning Robots in Virtual Reality

Nürnberg, Germany, March 2025.

Advances in Social Navigation: Planning, HRI and Beyond

Co-organizer of the workshop.

ICRA, Atlanta, May 2025.

UnsolvedSocialNav Workshop

Co-organizer of the workshop.

RSS, Delft, July 2024.

DFKI Saarland

Learning preference-aligned robot navigation from VR demonstrations using deep reinforcement learning.

Presentation, October 2023.

SEANavBench Workshop – IROS 2023

Personalized Human-Robot Interaction: Learning Depth Vision-Based Navigation with User Preferences.

Poster Presentation, October 2023.

Future of AI Summit – RWTH AI Week 2023

Exchange event about AI-related topics with the general public, industry and science community, organized under the auspices of the Alexander von Humboldt Foundation.

Poster Presentation, September 2023.

CVPR 2023 Workshop on 3D Vision and Robotics

Learning Depth Vision-Based Personalized Robot Navigation Using Latent State Representations.

Presentation, June 2023.

Max Planck Institute for Dynamics and Self-Organization | Prof. Priesemann Group Seminar

Learning personalized robot navigation from demonstrations using deep reinforcement learning.

Presentation, November 2022.

SEANavBench Workshop – ICRA 2022

Teaching Personalized Robot Navigation through Virtual Reality Demonstrations: A Learning Framework and User Study.

Poster Presentation, May 2022.

Projects

FOR 2535 – Anticipating Human Behavior

DFG Research Unit

Subproject P7 – Foresighted Robot Navigation Using Predicted Human Behavior

Waves to Weather

DFG Transregional Collaborative Research Center (SFB/TRR165)

Curriculum Vitae

| Research | |
|---|---|
| 2021 – current | Research associate at the Humanoid Robots Lab, University of Bonn, Germany |
| 2020 – 2021 | Research associate in the transregional collaborative research project "Waves to Weather" (SFB/TRR165), Johannes Gutenberg University Mainz, Germany |
| 2018 – 2019 | Master’s thesis research project on subsampled spreading dynamics, Max Planck Institute for Dynamics and Self-Organization, Goettingen, Germany |

| Education | |
|---|---|
| 2019 | Master of Science in Physics, Georg-August-Universität Göttingen, Germany |
| 2017 | Bachelor of Science in Physics, Georg-August-Universität Göttingen, Germany |

Last update: May 2023

Contact (Work)

Jorge de Heuvel

University of Bonn

Institute for Computer Science VI

Friedrich-Hirzebruch-Allee 8

53115 Bonn

Germany